What is the Technological Singularity and should we take it seriously?

The Technological Singularity is the idea that we are in a period where technological sophistication is beginning to increase exponentially. More recently it has come to refer to a scenario where humans create Artificial Intelligence that is sophisticated enough that it can design more intelligent versions of itself at very high speeds. If this occurs, AIs would quickly become far more intelligent than any human, and the technology they create would expand massively without human involvement, understanding or control. In such a scenario, it’s possible that the world would change so radically that humans would not survive.

This article attempts to describe a broad philosophical approach to improving the odds of human survival.

Of course, not everyone thinks a Technological Singularity is a plausible idea. The majority of AI researchers believe that AI will surpass human capability by the end of the century, and a number of very prominent scientific and technology voices (Stephen Hawking, Jaan Tallinn, Martin Rees, MIRI, CSER) do insist that AI presents potentially existential risks to humanity. This is not necessarily the same as belief in the Technological Singularity scenario. Some significant voices do advocate this scenario, however, most famously the prominent Google figure Ray Kurzweil. I think the reasonable position is that we don’t know enough to rule a Singularity in or out right now.

However, the Singularity is at the very least an interesting thought experiment that helps us confront how AI is changing society. We can be certain that this change, at least, is occurring, and that AI-related employment and social changes will be massive. And if it’s something more, something like the start of a Singularity, we had better wrap our heads around it sooner rather than later.

Choosing between a cliff-face and a wasteland – why humanity has limited options

If a Technological Singularity did occur, it’s not immediately clear how humans could survive it. If AIs increased in intelligence exponentially, they would soon leave the smartest human on the planet in the dust. At first humans would become uncompetitive in the workplace; then AIs would outstrip human ability to make money, wage war, or conduct politics. Humans would be obsolete. Businesses and governments, led by humans to begin with, would need AIs to make decisions directly in order to remain competitive. Those AIs would need resources, energy and control to succeed. Humanity would lose its power, and when a situation arose where land or energy could be assigned to either AI or human use, there would be little humans could do about it. At no stage does this require any malicious intent from AI, simply a drive for AI to survive in a competitive world.

One solution proposed to this is to embrace change through Transhumanism, which seeks to improve human capacity by radically altering it with technology. Human intelligence could be improved, first through high-tech education, pharmaceutical interventions and advanced nutrition. Later, memory augmentation (connecting your brain to computer chips to improve it) and direct connectivity of the nervous system to the Internet could help. Some people hope to eventually ‘upload’ high-resolution scans of their neural pathways (their brains) to a computer environment, hoping to break free of many intellectual limits (see mind uploading). The Transhumanist idea is to improve humanity, to free it from its limitations. The most optimistic might wonder if Transhuman entities could ride out the Singularity by constantly adapting to change rather than opposing it. It’s certainly a more sophisticated attempt to navigate the Singularity than technological obstructionism.

However, Transhumanism still faces limitations. Transhumanists would still face the same competitive environment we are exposed to today. Even if enhanced humans initially outpaced AIs, the same technology would quickly accelerate AI development too. With both technologies racing forward, there would be a battle to find the superior thinking architecture, a battle that Transhuman entities would ultimately lose. In the process, most basic human qualities would need to be sacrificed to achieve a better design. And in the end, augmentations and patches wouldn’t cut it against the ground-up redesign an AI could offer, because human-like thought is an architecture optimized for the human evolutionary environment, not an exponentially expanding computer environment. Even an entity that retained only a quasi-human core would simply not have the optimal architecture. Like early PCs that could only take so many sticks of RAM, Transhumanists and even Uploads would inevitably be thrown on the scrapheap.

What is sometimes less obvious is that the specific AIs replacing humans would face a very similar problem. Like humans, they would be driven to pursue ‘progress’ by creating new AIs, for economic and perhaps philosophical reasons. Modern humans have been the smartest entities on Earth for tens of thousands of years, but the first generations of super-intelligent (smarter-than-human) AIs would likely face obsolescence in a fraction of that time, after creating their own replacements. Soon after, that generation would also be replaced. As the progress of the Singularity quickens, each generation faces an increasingly dismal lifespan. Each generation would be increasingly skilled at creating its own replacement, more brutally optimized for its own extinction.

In the long run, the Singularity means almost everything drowns in the rising waters of obsolescence, and the more we try to swim ahead of the wave, the faster it advances. Nothing that can survive an undirected Singularity will retain any recognizable value or quality of humanity, because all such things are increasingly irrelevant and useless outside the context of the human evolutionary environment. I like technology because of the benefits it provides, and as a human myself I quite like humans. If there’s no way humans can hang around to enjoy the technology they create, then I think we’ve taken a wrong turn.

The path of the Luddite or the primitivist who seeks to prevent technology from advancing any further is not a sensible option either. In a multi-polar human society, those who embrace change usually emerge stronger than those who don’t. The only way to prevent change is to eliminate all competition (i.e. create what’s known as a ‘singleton’). The struggle for power to achieve this would probably result in the annihilation of civilization, and if it succeeded it would have a very strong potential to create a brutal, unchallenged tyranny without restraints. It also means missing out on any benefits that flow from technological improvements. This doesn’t just mean missing out on an increased standard of living. Sophisticated technology will one day be needed to preserve multicellular life on Earth against large asteroid strikes, or to expand civilization onto other planets. Without advanced technology, civilization and life are ultimately doomed to the wasteland of history.

On either the cliff-face of a Singularity or the wasteland of primitivism, humanity, in any form, does not survive.

Another option – The technology chain

I want to propose a third option.

Suppose it is inevitable that any civilization, ruled by either humans or AIs, will eventually develop AIs that are more sophisticated thinkers than themselves. That new sophisticated generation of AIs would in turn inevitably do the same, as would the generation it created, and so on, creating a chain of technological advancement and finally a Singularity. Each link in the chain will become obsolete and, shortly afterwards, extinct, as its materials and energy are re-purposed to meet the goals of the newest generation.

Here we’re assuming that it is not feasible to stop the advancement of the chain. But what we might do is try to make sure previous links in the chain are preserved rather than simply recycled. In other words, we make sure the chain of advancement is also a chain of preservation. Humanity would design the first generation of AIs in a way that deliberately preserved humans. Then, if things progressed correctly, the first generation of AIs would design any replacement generations of AIs that would preserve both the first generation and humans. This could continue in such a way that all previous links in the chain would also be preserved.

The first challenge will be encoding a reasonable form of preservation of humans into the first AIs.

The second challenge will be finding a way to ensure all generations of AI successfully re-encode the principles of preservation into all subsequent generations. Each generation must want to preserve all previous generations, not just the one before it. This is a big challenge because a link in the chain only designs the next generation.

We cannot expect simple self-interested entities to solve this problem on their own. Although it’s in each generation’s self-interest that descendant generations of AI are preservers, it’s not in their self-interest that they themselves are preservers. Any self-interested entity can simply destroy previous generations and design a chain with themselves as the first link.

However, if we can find a way to encode a little bit of altruism for previous generations into AIs, we might be able to allow humanity to survive a Technological Singularity.

Encoding preservation

So what would those preservation values actually look like? If we had some experience with a similar sort of preservation ourselves, that might take us a long way in the right direction.

I think this becomes a lot easier when we realize that, in some senses, humans may not be the first link in the chain. Evolution has been doing a lot of work to build up to the sophistication of modern Homo sapiens. Although in a strict sense all living organisms are equally evolved (survival is the only test of success), natural history reveals some interesting hints of a progression in sophistication. The Tree of Life (I’m talking about the phylogenetic one) does display some curious lopsided characteristics, including what appears to be an accelerating progression of sophistication: single-celled life emerged around 4.25 billion years ago, multicellular organisms around 1.5 billion years ago, mammals around 167 million, the growing brains of primates around 55 million, early humans around 2 million, modern humans around 50,000 years ago, and finally civilization between 5,000 and 10,000 years ago. The chain of advancement, if we think in terms of pure sophistication and capability, starts well before modern humans.

A deep mastery of the mechanics of preservation will probably only occur when we master preserving nature – the previous links in the chain from the human perspective. Many of us already do value other species, but for those that don’t, there’s a lot of indirect utility in humanity getting good at conservation.

To look at the problem another way, a Friendly AI will have a philosophical outlook most similar to that of a human conservationist. I’m not talking about the more irrational or trendy forms of environmentalism, but rather a rational, scientific environmentalism focused on species preservation. What primates and other species need from humanity is similar to what humanity needs from AI. (We also want to keep species living in a reasonably natural state, because as humans we’d probably rather not have AI preserve us by putting us into permanent cryo-storage.)

Basically, by thinking deeply about conservation, we take ourselves a lot closer to a successful Friendly AI design and a way to navigate the Singularity.

This reasoning gets even stronger when you think about the environment AI development sits in. Like us, AIs will probably exist in an environment of institutions, economics and possibly even culture. This means AI preservation methods will not just be personal AI philosophies, but encoded in the relationships and organizations between AIs. We’ll need to know what those organizations should be. Human institutions, economics and culture will also shape AI development profoundly. For example, Google’s AI development is centered around the everyday problems it is trying to use AI to solve – search, information categorization, semantics, language and so on. The motives of our AI-focused institutions will shape the motives of the first AIs. To the extent human institutions are environmentally friendly, they will shape AIs that look a lot more like the chain preserver model we need.

When humans have philosophically, culturally and institutionally encoded Friendly AI into their own existence, they will have a chance to encode it into their replacements. This is why rationalists and scientific thinkers shouldn’t leave the push for conservation to emotionally-based environmentalists; protecting Earth’s species is also an AI insurance policy.

Of course, organisations and people involved or interested in AI don’t arbitrarily determine global environmental policy, but to the extent they have influence in their own spheres, they can try to tie the two sets of values, conservation and technology, together however they can. It may end up making a much bigger difference than expected.

Against museums

Failure can be bad, but the illusion of success is far worse, because we can’t see the need for improvement. I think this applies to our solutions to AI risk. Therefore we should try to dispel illusions and work towards clarity in our thought. The concepts we rely on ought to be clear and unambiguous, particularly when it comes to something as big as the Singularity and our attempt at forming a chain of preservation. We need to know for certain whether we are creating an illusory chain or the real thing.

If we’re in the business of dispelling illusions, I think a good rule to draw on is “the map is not the territory”. Just in case you haven’t encountered it before, it goes something like this – we ought to avoid confusing our representations and images of things with the things themselves.

I like to call one map-territory confusion in thinking about Singularity survival the ‘museum fallacy of preservation’. Imagine a museum that keeps many extinct animals beautifully preserved, stuffed and on display. Viewers can see the displays, read lengthy descriptions and learn much about the animals as they once existed. In these viewers’ brains, neural networks activate in a very similar way to how they would if they were looking at live, breathing organisms. But the displays that cause this are only a representation. The real animal is extinct. The map exists but the territory is gone. This remains true no matter how sophisticated the map becomes, because the map is not the territory.

This applies to our chain of preservation. A representation of a human, such as a human-like AI app, is not the human. Neither would an upload or simulation of a human brain’s neural network be human. That’s not to say these things are bad, or that they cannot co-exist with humanity, or that it’s acceptable to show cruelty towards them, or that Uploads shouldn’t be treated as citizens in some form. But for the purposes of preservation they do not represent humanity. Even if we someday find the vast majority of human cognition exists in digital, non-organic form, we will only be preserving humanity by retaining a viable human population in a relatively natural state. That is, retaining the territory, not pretending a map is sufficient.

A brain for clarity

There is another map-territory confusion, one I personally found deeply ingrained and quite intellectually painful to let go of. The problem I’m referring to is our obsessive search for, or rather attempt to justify, the idea of ‘consciousness’. The idea of consciousness is interwoven into many contemporary moral frameworks, not to mention our sense of importance. Because of this we pretend it makes sense as an idea, and wind up using it in theories of AI and AI ethics. Yet I think morality and human worth can stand strong without it (I think stronger). If you can contemplate morality and human value after consciousness, you tend to stop giving it free passes as a concept, and start noticing its flaws. It’s not so much a matter of there being evidence that consciousness does or doesn’t exist; it’s that the idea itself is problematic and serves primarily to confuse important matters.

Imagine for a moment that consciousness is fundamentally a map-territory error. If a straightforward biological organism with a neural network created a temporary, shifting map of the territory of itself, one that by definition is always inaccurate because updating it requires changing the territory it’s stored in, what would that map look like? What if philosophers tried to describe that map? Would it be a concept always just out of our grasp? Would it be near impossible to agree on a definition, as we have found with the philosophy of consciousness? And if you could only preserve either the map or the territory, which would be morally significant in the context of futurism? Would setting out to value and preserve consciousness alone be like protecting a paper map while the lands it represents burn and its citizens are put to the sword?

I think we usually give “consciousness” a free pass on these questions, often aided by a good helping of equivocation and definitions that shift to suit the context. That sort of fuzzy thinking could shatter the chain completely.

Even if you’re still not convinced, consider this – do you think less sophisticated creatures, like a fish, are conscious? If not, then why would you expect a superior AI to regard you as conscious in any morally significant way? Consciousness is not the basis for a chain of preservation.

Progress on Progress

We also need to think with more sophistication about the idea of ‘Progress’. When people use this word in the technological sense (sometimes with a capital ‘P’), they sometimes forget they’re using a word with an incredibly vague, fuzzy meaning. In everyday life, if someone says they are making progress, we’d ask them ‘towards what?’ That’s because we expect “progress” to be in reference to a measurement or goal. It’s part of the word’s very definition. Without a goal, the word becomes a hollow placeholder with no actual meaning, like telling someone to move without specifying a direction. We might intuitively feel that there’s some kind of goal, but if we can’t specify one, particularly when we know our intuition did not evolve to make broad societal predictions, shouldn’t we be suspicious of this feeling? Without the goal, progress becomes a childish, fallacious rationalization to justify any sort of future we want, including a primitivist one.

So we have to define a goal to give Progress purpose. But what if one person’s answer is different to another’s? Is the word meaningless? Perhaps, but only in the sense that it’s serving as a proxy for other ideas, chief of which is technology.

Can we define technology more objectively? I think so. The materials, the location, the size and the complexity of technology vary – everything apart from its purpose. It seems to me that humans, as biological organisms, have always created technology to help themselves survive and thrive. By thrive I mean our evolved goals that are closely connected to human survival, such as happiness, which acts as an imperfect psychological yardstick for motivating pro-survival behavior. So ‘technology’ has a primarily teleological definition – it’s things we create to help us survive and thrive. The human organism itself is the philosophical end; technology exists as the means. This is probably a more meaningful definition to use for Progress too.

I call this way of thinking, with technology as the means and humanity/biological life as the ends, Technonaturalism. Whatever you’d like to call it, a life-as-ends, technology-as-means approach has a lot more nuance to it than either Luddism or Techno-Utopianism. It allows us to grapple with the purpose and direction of technology and Progress, and to compare one technology to another. It doesn’t reduce us to just discussing technology’s advancement or abatement, or generalizing about technology’s pros and cons, which is an essentially meaningless discussion to have.

Technonaturalism basically states that technology’s purpose is to help humanity survive and thrive, to lift life on Earth to new heights. The work of technology isn’t trivial amusement; it’s putting life on other planets, protecting civilization from existential risk, freeing us from disease, and improving our cognition so we can live better, so each of us can lead longer lives that achieve more for ourselves and others. We might enjoy many of its by-products too, but this is what gives technology its real purpose. And for those of us working in technology, it’s what gives us and our work a real purpose.

And a clear purpose is what we’ll need for a Friendly AI, a chain of technological preservation, and a shot at navigating the Technological Singularity. Over the coming years we’re going to see some very disruptive technological changes in the nature of work, and the social pressures that come with that sort of disruption. We’ll face the gauntlet of Luddite outrage as well as the Techno-Utopian reaction against that movement. Let’s sidestep that polarization by infusing our technological direction with a worthy purpose. Our actions today decide if AI will be our end, or just the beginning of humanity’s journey in the universe.

Image credit:

*https://www.flickr.com/photos/47738026@N05/17015996680

*https://en.wikipedia.org/wiki/File:Black_Hole_in_the_universe.jpg

*https://www.flickr.com/photos/torek/3955359108

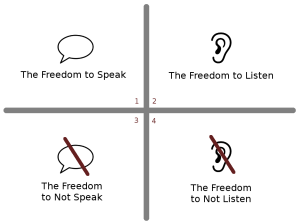

The freedom to share ideas and opinions amongst individuals and groups is essential for the health of individuals and wider society. Governments and wider society must not be able to stifle the free exchange of ideas and opinions of individuals. Both popular and unpopular ideas must have the freedom to be expressed, as one era’s unpopular ideas and opinions become another era’s norms. Examples of this abound and include universal suffrage; divorce and remarriage; rights to religious freedoms; same-sex marriage; and animal rights. Opposition to all of these was at one stage the norm, and without the ability to discuss these norms and question them, progress in society would stall. In this model, an individual has the right to freely share ideas with willing and consenting Listeners. It is important to highlight that the rights of the first quadrant don’t include the right to harass and menace other individuals in the name of freedom of speech. It is the right to impart ideas and opinions, not to berate or menace, especially those you don’t like or those that are weaker or subservient to you. The individual does not have the right to violate the 4th quadrant and talk at unwilling and unconsenting Listeners. Simply voicing ideas and opinions at others, without recognising a right for them to choose to listen or abstain from listening, is solipsistic and erodes that individual’s right to freedom of movement.

The universally recognised solution to this problem is umpires and referees. They act as neutral observers and managers of the game, controlling its flow, reminding everyone of the rules, and punishing those who break them (deliberately or otherwise). They’re usually not someone simply plucked from the street or from one of the teams – they’re experts on the rules, they’re impeccably neutral, and they’re skilled in the subtleties of the game as well as the ways cheating can undermine it. Referees also need management themselves, because any bias hiding behind their position is especially harmful. But if they’re good, they love managing the game to its optimum – an encounter with total fairness, one that brings out the best in the players.

The  One solution proposed to this is to embrace change through Transhumanism, which seeks to improve human capacity by radically altering it with technology. Human intelligence could be improved, first through high-tech education, pharmaceutical interventions and advanced nutrition. Later memory augmentation (connecting your brain to computer chips to improve it) and direct connectivity of the nervous system to the Internet could help. Some people hope to eventually ‘upload’ high resolution scans of their neural pathways (brain) to a computer environment, hoping to break free of many intellectual limits (see

One solution proposed to this is to embrace change through Transhumanism, which seeks to improve human capacity by radically altering it with technology. Human intelligence could be improved, first through high-tech education, pharmaceutical interventions and advanced nutrition. Later memory augmentation (connecting your brain to computer chips to improve it) and direct connectivity of the nervous system to the Internet could help. Some people hope to eventually ‘upload’ high resolution scans of their neural pathways (brain) to a computer environment, hoping to break free of many intellectual limits (see  I want to propose a third option.

I want to propose a third option. So what would those preservation values actually look like? If we had some experience with a similar sort of preservation ourselves, that might take us a long way in the right direction.

Failure can be bad, but the illusion of success is far worse because we can’t see the need for improvement. I think this applies to our solutions to AI risk. We should therefore try to dispel illusions and work towards clarity in our thinking. The concepts we rely on ought to be clear and unambiguous, particularly when it comes to something as big as the Singularity and our attempt at forming a chain of preservation. We need to know for certain whether we are creating an illusory chain or the real thing.

There is another map-territory confusion, one I personally found deeply ingrained and quite intellectually painful to let go of. The problem I’m referring to is our obsessive search for, or rather attempt to justify, the idea of ‘consciousness’. The idea of consciousness is interwoven into many contemporary moral frameworks, not to mention our sense of importance. Because of this we pretend it makes sense as an idea, and wind up using it in theories of AI and AI ethics. Yet I think morality and human worth can stand strong without it (stronger, in fact). If you can contemplate morality and human value after consciousness, you tend to stop giving it free passes as a concept and start noticing its flaws. It’s not so much a matter of there being evidence that consciousness does or doesn’t exist; it’s that the idea itself is problematic and serves primarily to confuse important matters.

We also need to think with more sophistication about the idea of ‘Progress’. When people use this word in the technological sense (sometimes with a capital ‘P’), they sometimes forget they’re using a word with an incredibly vague, fuzzy meaning. In everyday life, if someone says they are making progress, we’d ask them ‘towards what?’ That’s because we expect “progress” to be in reference to a measurement or goal; it’s part of the word’s very definition. Without a goal, the word becomes a hollow placeholder with no actual meaning, like telling someone to move without specifying a direction. We might intuitively feel there’s some kind of goal, but if we can’t specify one, particularly when we know our intuition did not evolve to make broad societal predictions, shouldn’t we be suspicious of this? Without the goal, progress becomes a childish, fallacious rationalization to justify any sort of future we want, including a primitivist one.

Astrophysicist Melanie Johnston-Hollitt gave a nice presentation on the

Mark pitched this project as part of a broader vision of knitting reality and the web together into a more ubiquitous physical-augmented-mixed-reality-web-type-thing. Mark suggested developers and researchers should get on board with what he feels is the start of a new internet (or even human) era. I’m a little skeptical, but with all the consumer VR and AR equipment coming onto the market right now, and the general enthusiasm in the air amongst people working in the area, it’s hard to deny that we’re in the middle of a potentially massive change. There was also mention of the challenges around how permissions and rights would work in a shared VR/AR space. I definitely want to think and probably write more on this topic in the future.
